Notes on universal computationalism

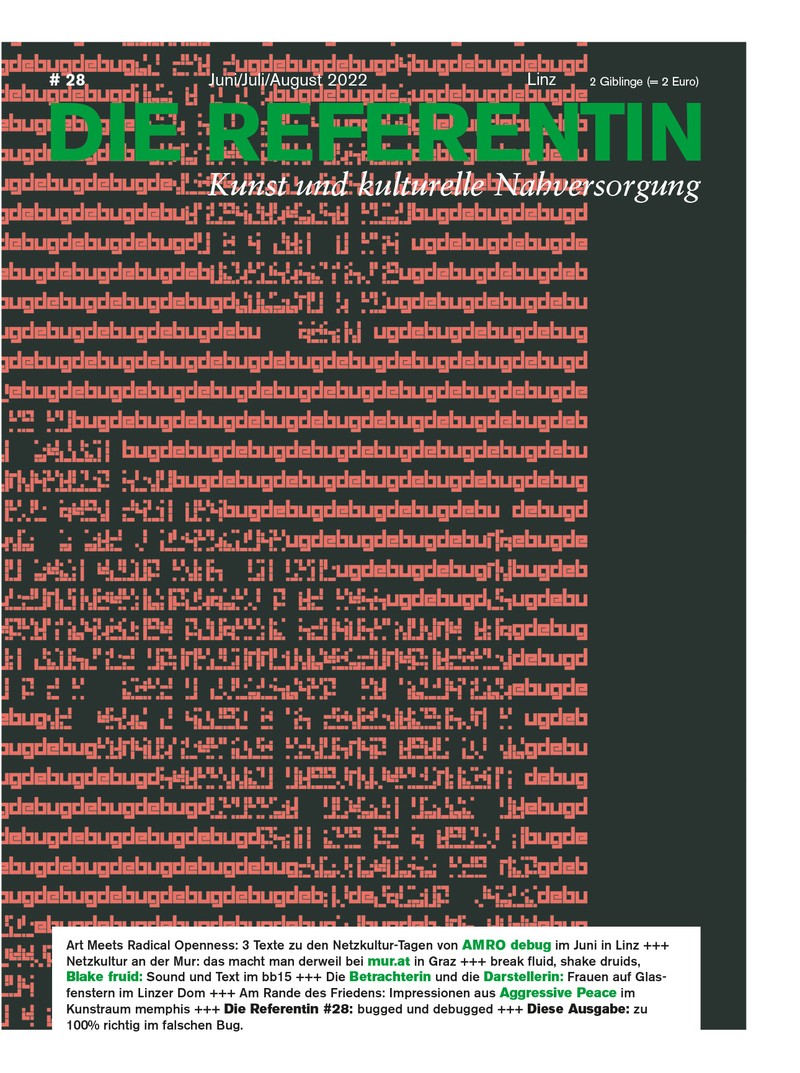

Die Referentin #28

What can encoded matter do? This text is a contribution to the AMRO22 DEBUG context. In this essay, GIA – the General Intelligence Agency (of Ljubljana) – tries to combine debugging as a theoretical concept with the pancomputationalist point of view. Within this new computational ontology, physics and philosophy become subsumed by a computer, and it’s this subsumption that produces the surplus from which a new kind of statement about the world can emerge.

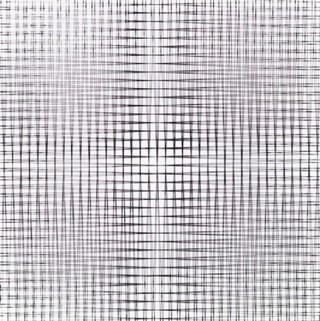

François Morellet, 2 doubles trames / 1° – 2°. Image: François Morellet

Let’s start with a provocative assertion: philosophy and (computer) programming are converging on the same plane. Even if we take into account the critiques of pancomputationalism and its uncanny similarity to the previously dominant model of the universe (that of Descartes), where everything turns into a mechanism, we still believe this path produces a novel approach to doing philosophy (and to philosophical problems) and outlines a new metaphysics for enhancing the capacity of the world at large. Proposing a new framework is easy. However, as with Kuhn’s scientific revolutions, master theories should show not only the emergence of a new solution framework but also the problems it solves. Problems whose solutions were previously unreachable, once solved, legitimise the revolution. As we will see, in a computational universe it’s the encoding and embedding of logos in matter that is fundamental, and it’s this procedure that is fully aligned with coding, debugging, deciphering, and so on.

So seeing bugs in society puts us squarely in this contemporary computational paradigm. Bugs are faults within existing solutions, but they can appear at different levels of abstraction. A key tenet of the open source bazaar is that with enough eyes all bugs are shallow. But generalising the notion of bugs allows for bugs within bug detection, even on the scale of many eyes. Surely you’ve noticed people claiming something is a bug while it was obvious to you that the phenomenon in question was a welcome feature of modernity. On the flip side, there might be occasions where it’s pragmatic to concede to a traditionalist that a particular potential bug is not reason enough to revolutionise an entire framework. Consider Maxwell, who, working within the mechanistic paradigm and annoyed at the “bug” of Newtonian action at a distance, insisted on conceiving of the electromagnetic field as a vast network of mechanical springs. Was he constrained by this buggy framework or was it the necessary condition for expressing his genius? In this sense it might be wise to embrace the computational hypothesis.

There are many starting points from which to outline this approach, but perhaps the clearest and most easily digestible one is found in constructor theory by David Deutsch and Chiara Marletto. One of the things this approach does is to show that in “constructor theory, physical laws are formulated only in terms of which tasks are possible (with arbitrarily high accuracy, reliability, and repeatability), and which are impossible, and why – as opposed to what happens, and what does not happen, given dynamical laws and initial conditions” (Marletto, 2015). By making this shift in understanding the possible, constructor theory advances the idea of natural laws as an algebra of possibility, where possibility and realizability collapse into one another. As with the metamathematical problems of incomputability and/or “algorithmic information theory, which poses the complexity of an expression in terms of the shortest program able to produce it, identifying the information content of said expression as the length of the programme” (Cavia, 2022, p. 138), truth becomes “that […] which can be computed” (Bauer in Cavia, p. 96).
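To make the idea of “the shortest program able to produce it” concrete, here is a minimal Python sketch. It rests on an assumption that is ours, not Cavia’s: since the length of the true shortest program is in general uncomputable, we use the length of a zlib-compressed encoding as a crude, computable upper bound. The function name `compressed_length` and both sample strings are hypothetical illustrations.

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form of `data`.

    Assumption: this is only a stand-in (an upper bound) for the length
    of the shortest program that outputs `data`, which cannot be computed
    in general.
    """
    return len(zlib.compress(data, 9))


# 10,000 bytes generated by a very short rule: "repeat 'ab' 5000 times".
regular = b"ab" * 5000

# 10,000 bytes from the operating system's random source; with overwhelming
# probability there is no pattern for the compressor to exploit.
random_like = os.urandom(10000)

print("regular:    ", len(regular), "->", compressed_length(regular))
print("random-like:", len(random_like), "->", compressed_length(random_like))
```

The string produced by a short rule shrinks to a tiny fraction of its length, while the random-looking one hardly shrinks at all. The proxy only ever bounds the information content from above: a cleverer encoding might always do better.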

Thus, physics has been inserted into a computer, and it’s the programmers that are now running the show. What is true is what can be computed, that is, what can be established by writing a program and finding a proof that verifies a given proposition – a diverse account of computation which follows from the constructive view. Programming is thus a machine that cumulatively expands the field of possibility, limited by its own incomputability and by physical laws. Only what can be constructed can be thought of as real, and everything else should be dismissed and discarded. Programming is thus not a static enterprise, a simple extension of already present axiomatics, but a dynamic enterprise of abductive reason(ing). Every program is an intelligent creation, and every proposition and proof thereof is a mining of something new into existence. To (re)formulate this in slightly different language, programming is nothing more or less than the encoding and embedding of logos into matter, and it’s this mechanisation of logos and logification of matter that should concern us. Indeed, “It’s worth noting that Scott Aaronson thinks cryptography and learning are dual problems. From this perspective, trying to learn something is like trying to decrypt the world” (Wolfendale, 2017).

Decrypting is a kind of debugging. Detecting buggy effects in general is not the same as detecting bugs, and both are obviously distinct from solving them. The first pair is an impetus for reflexivity and critique; the third has a problem-solution distance of its own. If bugs are like violations of rules, we find similar asymmetries in other rule-governed situations. “It’s immeasurably harder to discover a novel mathematical proof than it is to check one. It’s far harder to write a great novel than it is to read one, and far harder to compose a brilliant song than to enjoy one. […] The lesson is that in asymmetric interactions the sender and receiver can play different roles. Consider the fact that it’s often (though not always) harder to learn something than it is to teach it” (ibid.). However, these tasks are all susceptible to second-order bugs (another home for philosophical attention) that would misidentify bugs, creating unnecessary but completable tasks or Scheinprobleme (Carnap) with infinite problem-solution distances. An unsolvable problem either has infinite problem-solution distance or requires an impossible composition: a missing step or a faulty attempt at composing the incomposable.
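The asymmetry between checking and discovering can be sketched in a few lines of Python. The subset-sum puzzle used here is our own stand-in, not an example from Wolfendale: `check` verifies a proposed solution in a single pass, while `find` has no better strategy in this sketch than trying subsets one by one. Function names and numbers are hypothetical.

```python
from itertools import combinations
from typing import Optional, Sequence, Tuple


def check(numbers: Sequence[int], indices: Sequence[int], target: int) -> bool:
    """Verify a proposed solution: distinct, valid positions whose values sum to target."""
    valid = len(set(indices)) == len(indices) and all(
        0 <= i < len(numbers) for i in indices
    )
    return valid and sum(numbers[i] for i in indices) == target


def find(numbers: Sequence[int], target: int) -> Optional[Tuple[int, ...]]:
    """Discover a solution by brute force, trying ever larger subsets of positions."""
    for size in range(len(numbers) + 1):
        for indices in combinations(range(len(numbers)), size):
            if sum(numbers[i] for i in indices) == target:
                return indices
    return None  # no subset of the numbers reaches the target


numbers = [3, 34, 4, 12, 5, 2]
solution = find(numbers, 9)

print("found positions:", solution)
print("checks out:", solution is not None and check(numbers, solution, 9))
```

Checking costs one pass over the proposed positions, whereas this brute-force search may have to inspect up to 2^n subsets for n numbers. A target that no subset reaches makes `find` exhaust every one of them before it can report failure.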

Philosophers from Plato to the critical theorists have undertaken plenty of tasks that we could understand as a kind of debugging, correcting faulty thought patterns and erecting systems of knowledge that would perpetuate enlightenment. Dominant cognitive frameworks were particularly attractive for them. Meanwhile, some cognitive archeologists speak of the problem-solution distance as a measure of technological complexity and thus of the appropriate cognitive apparatus needed to manage this complexity. This abstract approach allows us to think about bugs as well. Composition is the fundamental operation of constructing solutions. It is worth noting that a potential science of composition in general finds its approximation in category theory, which is currently inspiring many philosophers to take it seriously as a framework. Just as Frege’s revolution inspired the logical positivists to pursue the ideal of unifying all science, we might await similar results from this new framework.

The interplay of the economic necessities and speculative freedoms of engineering has long taken hold of the freedom of humanity. Its latest outburst is found within “crypto”. A freedom-affect is spreading throughout the so-called web3, and regardless of what we may feel about its current state of politics or engineering, we should take it seriously. If philosophy is speculation in the realm of pure reason, speculation in the realm of machines is engineering. This kinship is why we welcome speculative engineering practices.

Within this new (computational) matrix of possibility, humanity has entered a new epoch of agential realisation. Our interaction(s) with matter were previously intransitive, or forced upon it from the outside, with matter subjected to brute-force manipulation, whereas now everything has become transitive. The external relation to material, energetic and informational processes has been internalised, and previous techniques have been discarded in favour of a universal Turing machine. “In that sense, the term ‘technology’ is not reduced to external academic or engineering ‘knowledge’ (lógos) about technique anymore, but extends to technical ‘thought’ itself, to the originary coupling of cognition and technique as machinery in its own right” (Ernst, 2021, p. 181). Objects have been replaced with processes, and within this new processual (media) ontology, everything, including speech or written information, has become encoded and embedded in alphanumeric code and thus not simply inscribed but encrypted. In technologos, every event or memory is a material reconstruction, and everything is instantiated by matter itself. Humanity has thus shifted from the semantic technology of writing, which doesn’t have the capacity to encode the real, towards a much more dynamic mode of inscription as information processing. “While alphabetic writing is still passive literature, alphanumeric coding becomes a speech act (so to say) in actual computing. […] As formal reasoning, lógos does not simply come into the machine, but inextricably is machine as operational matter” (ibid., pp. 3, 20).

What are the speculative possibilities of this radically updated materialism? There are many, but let’s stay with the most fascinating example, a truly posthuman theory of deep futurology by Davor Löffler. As we have already established, we live within a computational universe, and we can construct ever better encodings and embeddings of operational diagrams in matter, and thus perpetuate this process of cumulative abstraction ad infinitum. “For technical lógos in being, even abstraction of matter does not result in metaphysics, but in its operative re-entries” (ibid., p. 136). First of all, what (in constructor theory) is called a constructor is termed Platonic processual form in Löffler’s account. Through the problem-solution distance lens, everything goes through the process of ingression or world rendering, and everything needs to be produced – even things we now accept as givens, like the past continuous tense or the colour blue. Nevertheless, this process is unfolding precisely because its potentials and constraints follow a pattern that can be realised and thus predicted and exploited – within this computational ontology, the ability to go meta becomes increasingly explicit. Thus, it’s no coincidence that Löffler was the first to state the following: “If this is true, that there is a matrix of possible worlds, then it means that we can find the structure of why these worlds are emerging and then we can ask, for example, what comes after the human, you see? And now we don’t have to speculate on the posthuman anymore, now we can scientifically ground it. By logic and science we can derive what could come after the human” (Löffler, 2021).

Sources

Cavia, A.A. (2022). Logiciel: Six Seminars on Computational Reason. Berlin: The New Centre for Research & Practice.

Ernst, W. (2021). Technológos in Being: Radical Media Archaeology & the Computational Machine. London: Bloomsbury Publishing.

Löffler, D. (2021, June 29th). The Meaning of Life. A Journey to the Origins of Worlds [Video]. Available at www.youtube.com/watch?v=s16ScBXRl1s

Marletto, C. (2015, July 15th). Life without design: Constructor theory is a new vision of physics, but it helps to answer a very old question: why is life possible at all?. Aeon. Available at aeon.co/essays/how-constructor-theory-solves-the-riddle-of-life

Wolfendale, P. (2017, December 22nd). Transcendental Blues [Blog]. Available at deontologistics.co/2017/12/22/transcendental-blues

AMRO22 – Art Meets Radical Openness DEBUG

15th–18th June 2022

afo – architekturforum oberösterreich, Stadtwerkstatt, Kunstuniversität Linz, and more.

AMRO is a festival dedicated to Art, Hacktivism and Open Culture. The current edition of Art Meets Radical Openness is dedicated to the rituals and philosophies of debugging, which AMRO22 takes as the starting point for a conversation between artists, groups and communities moving together between the fields of culture, politics and technology.

art-meets.radical-openness.org
